“…And that is why Acme Corp has an Account Score of 94”

Why is account scoring blowing up?

The world of account data has exploded in recent years. In RevOps, we now have access to intent signals, technographic data, departmental information…the list goes on. These data about prospects and customers present exciting opportunities. The more we know about accounts, the better we can prioritize them and divvy them up among sellers. A simple way to achieve this is an account scoring system. An account scoring system takes different attributes and indicators about an account, assigns a numerical value to each, and weights them to produce a score indicating the quality of that account. The results might show, for instance, that Acme Corp scores 94 out of 100 and is an ideal account to target. Meanwhile, Pied Piper scores 52 out of 100, so let’s not waste valuable time and resources targeting it.
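The "assign a value, then weight it" step can be sketched as a simple weighted average. This is a minimal illustration, not any particular company’s model: the attribute names, the weights, and the per-attribute values are all hypothetical, chosen only so the two example accounts land on the 94 and 52 used in this post.

```python
# Hypothetical attributes and weights (percentage points summing to 100); illustration only.
WEIGHTS = {
    "industry_fit": 30,
    "company_size_fit": 25,
    "tech_stack_fit": 25,
    "intent": 20,
}


def account_score(attributes: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Weighted average of 0-100 attribute values, giving a 0-100 account score.

    Attributes missing from the input count as 0.
    """
    total_weight = sum(weights.values())
    weighted_sum = sum(w * attributes.get(name, 0) for name, w in weights.items())
    return weighted_sum / total_weight


# Hypothetical attribute values for the two example accounts.
acme = {"industry_fit": 100, "company_size_fit": 90, "tech_stack_fit": 95, "intent": 90}
pied_piper = {"industry_fit": 50, "company_size_fit": 60, "tech_stack_fit": 40, "intent": 60}

print(round(account_score(acme)))        # 94
print(round(account_score(pied_piper)))  # 52
```

A weighted average keeps the result on the same 0-100 scale as the inputs, so adding or re-weighting an attribute does not change what "94" means in range terms.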

How is account scoring being used today in territory design?

When designing territories, account scoring can be a useful tool for balancing and grading. By understanding how ‘good’ an account is, RevOps can more adeptly balance accounts between reps and plan capacity needs. We need to make sure each rep has enough good accounts to meet their performance goals and isn’t wasting time on low-scoring accounts. Easy peasy. But when we talk to companies, satisfaction with account scores is very low. In fact, 65% of the 500 RevOps pros we surveyed were not satisfied with their company’s account scoring system. More importantly, 76% of the 300 sellers and sales leaders we surveyed took issue with account scores.

So if we have this new wave of exciting data and it can help our teams better prioritize accounts, why is satisfaction so low? We look at some of the common pitfalls below:

Pitfalls and how to avoid them

The Score is not trusted by Sales or RevOps

Data inaccuracies

We talked to a number of companies that called BS on their own score. Many were dismissive, treating it as entirely wrong rather than incomplete or imperfect. The #1 reason behind this attitude was perceived data quality problems. Sellers and RevOps have firsthand experience with, and battle scars from, the problems in these new treasure troves of data. Account attributes like technographics, micro-industry, and previous users are all useful…when the data are accurate. But the survey participants were extremely skeptical about the accuracy of these data and therefore assumed that the resulting account score was flawed.

Black box scoring

This skepticism was made worse because the score was calculated in a ‘black box.’ Sellers assumed that lower-level RevOps analysts were in charge of the process and didn’t understand the product/market fit that the score was trying to indicate. When we investigated further, we found that sellers were right to feel left out. Most account scores were earnest endeavors, but few companies did a good job of publishing the process and establishing a feedback loop with Sales and Product.

Lack of consistency

Trust in (and use of) account scores faded as the year went on. Few companies in our survey were able to capture the dynamic nature of today’s account data and build it into their business processes. Account scores were relevant during planning season, when accounts were initially distributed. After that, they were never heard from again until the next year.

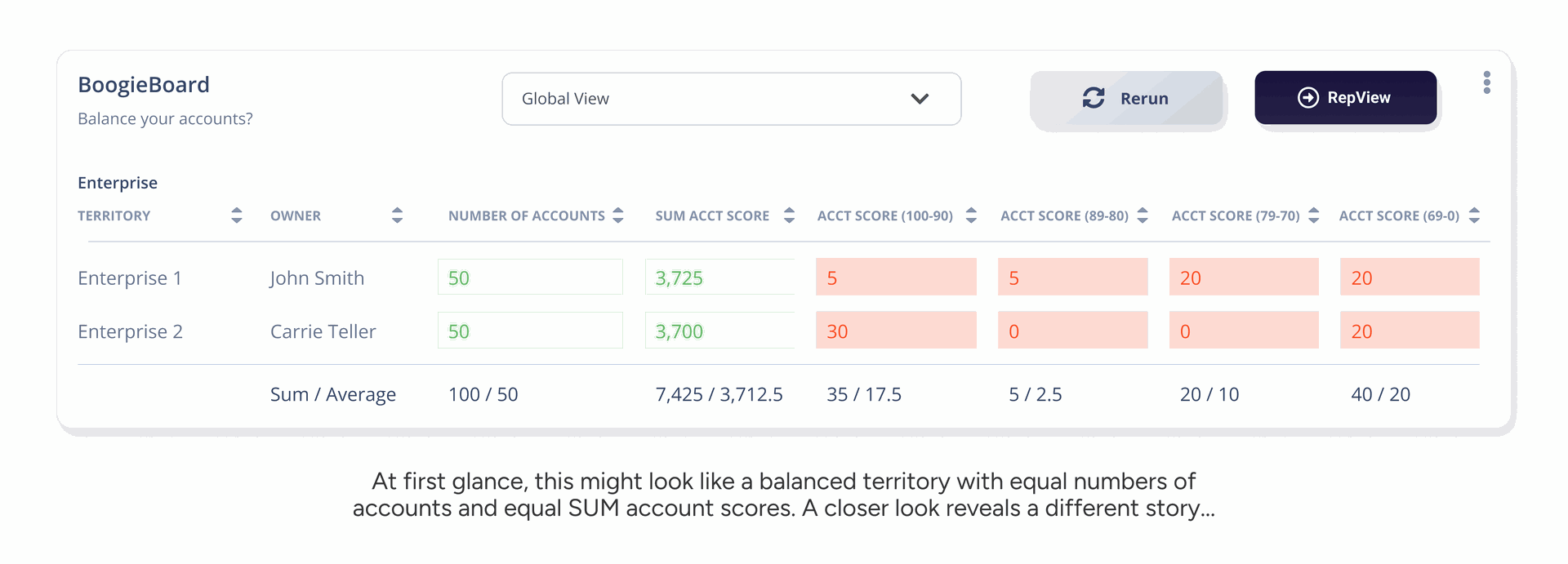

Using the SUM of total account scores

Many of the territories and books of business we reviewed made the mistake of summing the account scores for each rep or territory. This made territories quick to design but did a poor job of balancing them. Outliers were responsible for the imbalance: a few very high or very low scores can make two territories’ totals match even when the mix of accounts behind them is very different. See how this can happen in the example below:
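A small sketch with made-up scores shows the effect: two hypothetical territories with identical totals, compared first by sum and then by the number of accounts in each score band. The band cut-offs (80 and 50) are assumptions for illustration only.

```python
from collections import Counter

# Hypothetical territories: each is a list of 0-100 account scores.
territories = {
    "Territory A": [98, 97, 95, 10, 10, 10, 10, 10],  # three outliers plus weak accounts
    "Territory B": [65, 60, 55, 50, 40, 30, 20, 20],  # no standout accounts
}

BANDS = ("High", "Medium", "Low")


def band(score: int) -> str:
    """Hypothetical cut-offs: 80+ is High, 50-79 is Medium, below 50 is Low."""
    if score >= 80:
        return "High"
    if score >= 50:
        return "Medium"
    return "Low"


def band_counts(scores: list[int]) -> dict[str, int]:
    """Count accounts per band, listing empty bands as 0."""
    counts = Counter(band(s) for s in scores)
    return {name: counts[name] for name in BANDS}


for name, scores in territories.items():
    print(name, "sum:", sum(scores), "bands:", band_counts(scores))
# Territory A sum: 340 bands: {'High': 3, 'Medium': 0, 'Low': 5}
# Territory B sum: 340 bands: {'High': 0, 'Medium': 4, 'Low': 4}
```

On the sum alone these two books look perfectly balanced; the band counts show that one rep holds every high-scoring account. Balancing on counts per band (for example, giving each rep a similar number of High accounts) is one way to act on the "Use bands when balancing territories" advice in this section.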

How to avoid:

Involve Sales Leaders, Marketing Leaders, Product Leaders, and select Sellers in the Account Scoring process

Create a feedback loop so that this collaboration is ongoing

Use bands when balancing territories

Check out this webinar from Go Nimbly and RevOps CoOp for best practices on data normalization

The Score doesn’t tell the account’s story

A Singular Score Across Data Types

What does it mean that Acme Corp scores 94 out of 100? Clearly it’s good, and sales should go after the account. This type of general account quality (or “fit”) is an important component of territory design. How many quality accounts a seller has helps determine territory equity, quota planning, and capacity planning. It also helps sellers prioritize their time on the right accounts. But ‘94’ doesn’t tell the full story. For example, we don’t know which of the account’s attributes most indicate that it is high quality. These star attributes should tell us how to attack the account, not simply that we should attack it. And when the scoring of certain attributes is wrong, it unnecessarily erodes seller trust in the entire score.

In our survey, many reported making the mistake of rolling a ‘fit’ score and an ‘intent’ score into the same number. Intent is ever-changing and therefore needs to be updated throughout the year in real time (e.g., if someone visited our website in January, that should boost the intent score in Q1, but the boost should decay over time as the action becomes less relevant). These companies were passing accounts to sellers because the account had shown ‘intent’, but that intent was too far in the past to be meaningful (e.g., someone from account X attended one of our webinars two years ago).
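One way to keep intent separate from fit and let it fade is exponential decay with a half-life. The sketch below is a minimal illustration, not a recommended model: the 90-day half-life, the 40-point value of a website visit, and the 100-point cap are all hypothetical parameters.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical parameter: an intent signal loses half its weight every 90 days.
HALF_LIFE_DAYS = 90


@dataclass
class AccountScores:
    fit: float     # slow-moving: refreshed at planning time
    intent: float  # fast-moving: recomputed as signals arrive and age


def decayed_points(points: float, signal_date: date, as_of: date,
                   half_life_days: float = HALF_LIFE_DAYS) -> float:
    """Exponentially decay a signal's points based on its age in days."""
    age_days = max((as_of - signal_date).days, 0)  # treat future-dated signals as brand new
    return points * 0.5 ** (age_days / half_life_days)


def intent_score(signals: list[tuple[date, float]], as_of: date,
                 cap: float = 100.0) -> float:
    """Sum of decayed signal points, capped so it stays on a 0-100 scale."""
    total = sum(decayed_points(points, signal_date, as_of) for signal_date, points in signals)
    return min(total, cap)


visit = date(2024, 1, 15)
signals = [(visit, 40.0)]  # hypothetical: one website visit worth 40 points

q1 = AccountScores(fit=94, intent=intent_score(signals, as_of=visit + timedelta(days=90)))
later = AccountScores(fit=94, intent=intent_score(signals, as_of=visit + timedelta(days=730)))
print(q1.intent)     # 20.0: half the original weight after one half-life
print(later.intent)  # well under 1 point two years on
```

Because fit and intent live in separate fields, a two-year-old webinar can no longer prop up an account’s headline number, while its fit score stays untouched.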

A Singular Score Across Teams/Segments

Segments and customer profiles exist to define different types of customers for your business. So if there are different types of customers who require different sales motions, different messaging, and different products, they should not be defined by the same score. For example, if we sell one product to government-owned accounts and another to SaaS startups, the calculation and weighting of the account score should be different for each.
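A sketch of segment-specific scoring: each segment gets its own weight table, and an account is scored only against its own segment’s table. The segment names follow the example above; the attribute names and weights are hypothetical.

```python
# Hypothetical attribute names and weights (percentage points summing to 100 per segment).
SEGMENT_WEIGHTS = {
    "Government": {"compliance_certified": 40, "budget_cycle_fit": 35, "tech_stack_fit": 25},
    "SaaS Startup": {"tech_stack_fit": 40, "funding_stage_fit": 35, "growth_rate_fit": 25},
}


def segment_score(segment: str, attributes: dict[str, int]) -> float:
    """Score 0-100 attribute values with the weights of the account's own segment."""
    weights = SEGMENT_WEIGHTS[segment]  # an unknown segment raises KeyError rather than silently defaulting
    total_weight = sum(weights.values())
    weighted_sum = sum(w * attributes.get(name, 0) for name, w in weights.items())
    return weighted_sum / total_weight


agency = {"compliance_certified": 100, "budget_cycle_fit": 80, "tech_stack_fit": 60}
print(segment_score("Government", agency))    # 83.0
print(segment_score("SaaS Startup", agency))  # 24.0
```

The same agency looks strong under the model built for its segment and weak under one built for startups, which is why one shared formula would misrepresent at least one of the two segments.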

How to avoid:

Build distinct scores

e.g., one for intent data and one for firmographic data

e.g., one for the Enterprise segment and one for the Government segment

Publish a ‘How It’s Made’ document about the account score so that the entire company has visibility

Consider simpler scores if the precision is confusing

e.g., instead of a 100-point scale, just use Green, Yellow, and Red

Instead of weighting all variables into a score, simply treat each one as binary (yes/no)

Connect the score to the desired behavior

See how Challenger (the company behind The Challenger Sale) introduced its new account scoring here.
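The two "simpler score" options in the list above can be sketched in a few lines. The colour thresholds (75 and 50) and the yes/no criterion names are hypothetical, picked only to illustrate the idea.

```python
# Hypothetical thresholds; a team would pick these from its own score distribution.
def traffic_light(score: float, green_at: float = 75, yellow_at: float = 50) -> str:
    """Collapse a 0-100 score into Green / Yellow / Red."""
    if score >= green_at:
        return "Green"
    if score >= yellow_at:
        return "Yellow"
    return "Red"


# Binary alternative: each criterion is a yes/no check instead of a weighted input.
# The criterion names are hypothetical.
CRITERIA = ("in_target_industry", "uses_compatible_crm", "over_200_employees")


def criteria_met(account: dict[str, bool]) -> dict[str, bool]:
    """Report each criterion as met or not; missing data counts as not met."""
    return {name: bool(account.get(name, False)) for name in CRITERIA}


print(traffic_light(94), traffic_light(52), traffic_light(30))  # Green Yellow Red
print(criteria_met({"in_target_industry": True, "over_200_employees": True}))
# {'in_target_industry': True, 'uses_compatible_crm': False, 'over_200_employees': True}
```

The binary version also tells the seller which criteria an account meets, which speaks to the earlier point that a single number hides the account’s story.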

Account Scoring Guide

While we won’t go into depth on how to score accounts, here is a great step-by-step guide posted by Jim Gilkey, host of Account Based Beverages:

In Conclusion

Determining which accounts are good or bad, and how they are good or bad, can be really helpful in designing territories. It can provide clear prioritization and, when done correctly, communicate to sellers how they should act in their territories. But RevOps must avoid these pitfalls, or scoring can do more harm than good: it can erode trust, create imbalances, and confuse the go-to-market direction.